RoboticsSoftwareEngineering 2022

September 7-9, 2022

GSSI - Gran Sasso Science Institute, L'Aquila-Italy

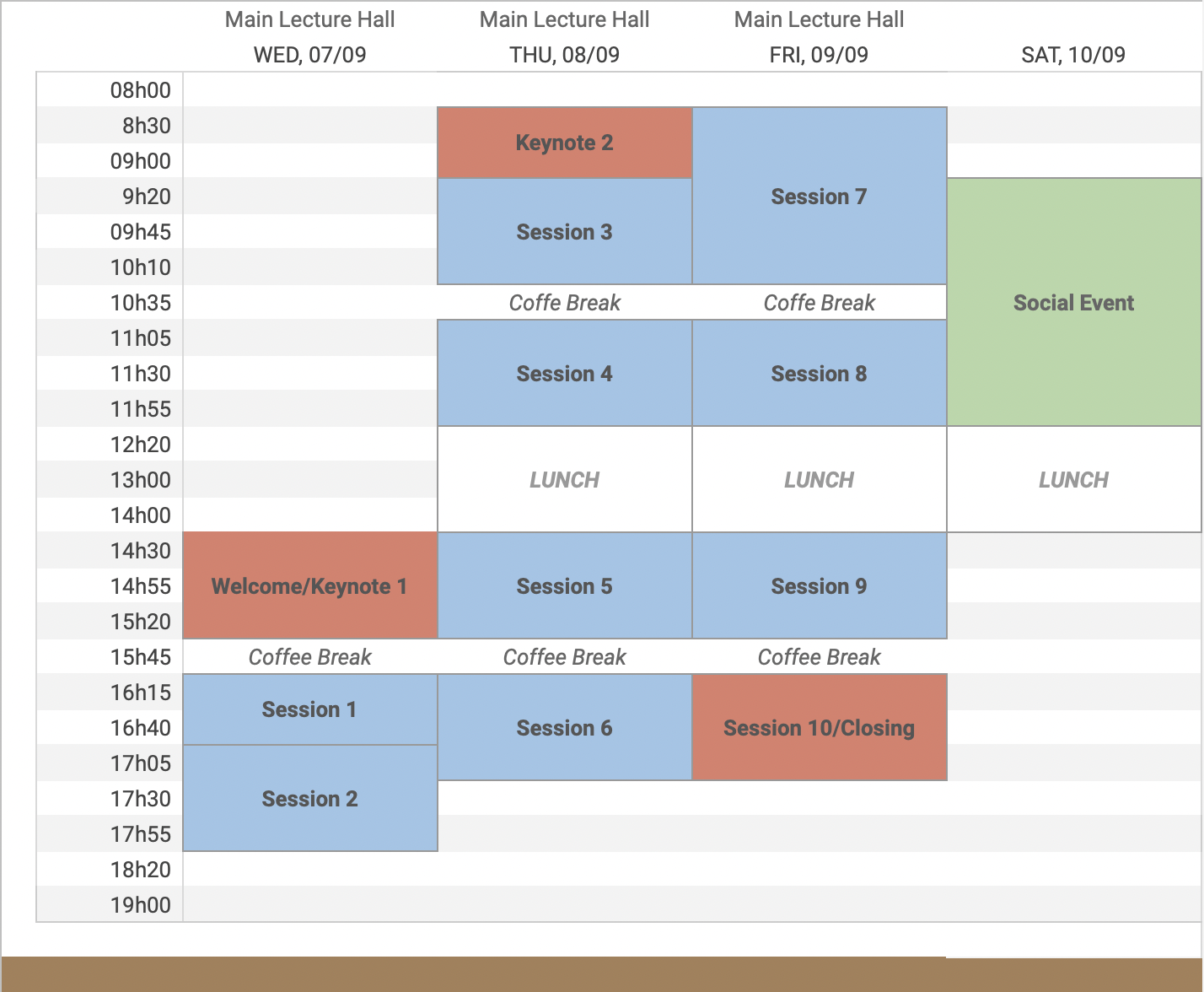

Participants should prepare for no more than 12 minutes of presentation, so that we have enough time for discussion (approximately 13 minutes). A total of 25 minutes is reserved for each talk. Below is a detailed schedule for the RSE'22 meeting days. Please make sure to provide us with your slides before the start of your session. You can mail your slides in PDF format (or a link to Google Slides) to rsemeeting@gmail.com.

| 14:30 | Welcome |

| Organizers |

| 15:05 | Keynote 1: UAVs for remote sensing |

| Enrico Stagnini (Gran Sasso Science Institute - GSSI) | |

| 15:40 | Coffee Break |

| 16:15-17:05 | Session 1 |

| Chair: Patrizio Pelliccione |

| 16:15 | ChoCoBots: Choreography of Collaborative Robots |

| Marco Autili (University of L'Aquila) | |

| During the last two decades, service choreography has received considerable attention from the research community as a versatile approach for building service-based distributed systems. In business computing, service choreography is a form of service composition in which the interaction protocol among software services is defined from a global perspective. By embracing the main characteristic of distributed systems, the idea underlying the notion of service choreography can be summarised as follows: "dancers dance following a global scenario without a single point of control". |

| 16:40 | Synthesis and Control of Autonomous Systems |

| Yehia Abd Alrahman (University of Gothenburg) | |

| The increasing adoption of robots in industry creates a demand for robots that can interact to form teamwork Autonomous Systems: a set of collaborative agents that interact and behave as if they were a single capable agent, pursuing a set of joint goals. Teamwork plans are important not only because of workload sharing, but also because the capabilities of a team exceed those of an individual. Correct-by-design techniques that ensure the safety and reliability of such systems are emerging, but are not yet mature. Reactive Synthesis and Supervisory Control, in particular, are viable candidates. They permit producing robust controllers with assurances on correctness and guarantees on meeting design goals. |

| 17:05-18:20 | Session 2 |

| Chair: Thorsten Berger |

| 17:05 | Dolphin DSL for autonomous vehicles networks |

| Keila Mascarenhas de Oliveira Lima (Western Norway University of Applied Sciences) | |

| Autonomous vehicles are now used for a variety of scientific, military, and civilian applications. The piloting and control of these vehicles have become significantly easier alongside the evolution of the technology driving these vehicles' capabilities, such as actuation, communication, and batteries, to name a few. In particular, these applications can now use robotic toolkits and their specific communication protocols to deploy several vehicles at once for a common purpose in a dynamic networked environment. |

| 17:30 | Digital twin and interoperability |

| Enxhi Ferko (Mälardalen University) | |

| Nowadays, digitalization fueled by the use of advanced technologies such as internet of things, artificial intelligence, robotics and automation is transforming an increasing number of sectors including manufacturing, automotive, healthcare and smart cities. |

| 17:55 | Flocks of birds from the bottom up |

| Luca Di Stefano (University of Gothenburg) | |

| Systems of agents may display a surprisingly complex collective behaviour that emerges as a result of the individuals interacting with each other. This kind of phenomenon is commonplace in nature, and by replicating it we would likely obtain a new generation of robust and adaptive robotic systems. However, developing such systems is challenging due to a lack of sufficiently high-level specification languages on one hand, and of adequate analysis tools on the other. In this talk, we describe a simple language and associated tooling that may address these gaps. |

| 08:30 | Keynote 2: Satellite OnBoard SW, Architecture and Validation |

| Paolo Serri (Thales Alenia Space, L'Aquila, Italy) | |

| 09:20-10:35 | Session 3 |

| Chair: Luciana Rebelo |

| 09:20 | Value-based Ecosystems |

| Patrizio Pelliccione (Gran Sasso Science Institute - GSSI) | |

| 09:45 | Ethical-aware Adjustable Autonomous Systems |

| Martina De Sanctis (Gran Sasso Science Institute - GSSI) | |

| Modern systems are increasingly autonomous thanks to the widespread use of AI technologies, and their impact on the social, economic, and political spheres is becoming evident. Worries about the growth of the data economy and the increasing presence of AI-enabled Autonomous Systems (ASs) have shown that privacy concerns are insufficient: other ethical values and human dignity are at stake. Humans should be supported in controlling the system’s autonomy by enabling them to take operational control of the system when they feel that it is necessary, or by assuring that the system’s decisions comply with their ethical preferences, without violating regulations and laws. In this presentation, we introduce HALO, which enables users to express their moral preferences and then adjusts the system’s autonomy and the related interaction protocols. The customization of the system’s autonomy is guaranteed by a software mediator that, depending on the user’s ethical preferences, first determines the new level of autonomy and then (re-)distributes autonomy and control among the involved entities (e.g., system’s components, software agents, humans interacting with the system, etc.). HALO is demonstrated with a robotic example. |

| 10:10 | Protecting user’s privacy and boosting cooperation with robots |

| Costanza Alfieri (University of L'Aquila) | |

| Machine learning techniques are widely used for speech recognition, image recognition and text mining, all of which involve data with a certain degree of concreteness. We are interested in understanding the scope of these techniques when applied to data that represents people's moral values and ethical positions. The final goal is to profile users according to their ethical positions in order to predict their digital actions. Our research questions are the following: is it possible to create a moral profile for each user? Which data and which machine learning techniques should be used? This profile should consider the user’s desiderata as well as their actual behavior in the digital world. Profiling and prediction of behaviors will be exploited to build a software exoskeleton to empower the user when interacting with the digital world. The main purpose of the exoskeleton is to help the user cooperate fairly with robots and autonomous systems, by protecting the user’s privacy as well as fostering their collaboration. |

| 10:35 | Coffee Break |

| 11:05-12:20 | Session 4 |

| Chair: Patrizio Pelliccione |

| 11:05 | Design "moralware" for future robotics |

| Patrizio Migliarini (University of L'Aquila) | |

| The advent of a particular class of software that will manage human-computer interaction according to ethical principles is imminent. The European Data Protection Supervisor calls it "moralware." Never before has ethics been such a critical issue in designing a future in which humans and robots will work closely together, a collaboration of which we already see concrete examples, from space exploration to autonomous driving on some roads. In this area of joint research between philosophers and computer scientists, it is interesting to assess the state of the art of the many "human ethics" and how these can be implemented in the digital world to make the interaction between robots and humans fair, equitable, and respectful of the rights of individuals and communities. Starting with privacy as an ethical dimension, we began by investigating the possibility of bringing out values typical of humans in order to best ensure their respect. This approach can certainly be expanded to robotic design with the idea of creating machines that serve human beings, respecting their characteristics and uniqueness. |

| 11:30 | Testing Ethical Aspects of Robotic Systems |

| Mohammad Reza Mousavi (King's College London) | |

| In this presentation we start with an overview of the ethical frameworks and different ethical concerns arising from them. We then present some ideas from our ongoing research on testing ethical concerns. |

| 11:55 | Towards Ethical Robotic inspired by psychology |

| Massimiliano Palmiero (University of L'Aquila) | |

| In recent years, the use of robots as increasingly autonomous machines that assist and help humans in daily activities has grown (e.g., semiautonomous flying robots or driverless cars). This means that robots must be equipped not only with cognitive abilities but also with ethical capacities that guarantee safe interactions with humans. In general, previous approaches addressed this issue by relying on the verification of logic statements. More recently, the idea has emerged that robots should be capable of modelling, and therefore predicting, the consequences of actions in order to behave compatibly with humans' needs, that is, without harming humans or even themselves. To this end, the simulation approach has been used to equip robots with ethics. This approach assumes that modal simulations can arise in the brain, as partial reactivations of sensori-motor and introspective states formed on the basis of experience (e.g., mental imagery is the best-known case of non-automatic simulation). Thus, thinking would imply building a grounded model of the environment, which is composed not of abstract symbols but rather of perceptual (modal) symbols, by which robots can covertly simulate actions and their consequences. In other words, giving analogic mental representations to robots can be the first step towards ethical robots. In this vein, the role of mirror neurons might also be explored to implement ethical actions in robots. |

| 12:20 | Lunch |

| 14:30-15:45 | Session 5 |

| Chair: Davide Brugali |

| 14:30 | Engineering Transparent Robots with Provenance-Based Methods |

| Nico Hochgeschwender (Bonn-Rhein-Sieg University) | |

| I will introduce the concept of transparency for robotic and autonomous systems and I will argue why it should become a quality attribute for robotic systems. My proposition is that robot software engineers should aim for establishing transparency-by-design such that one can support the post-deployment assessment and assurance activities of the various stakeholders involved in the development, deployment and maintenance of robotic systems. In addition, I will describe how we realized transparency for a mobile and autonomous logistic robot. Based on the lessons learned, I will propose the concept of provenance as a way to provide a systematic approach to realizing the vision of transparency-by-design. |

| 14:55 | Using behaviour trees as a test oracle for system-level tests |

| Argentina Ortega Sainz (Hochschule Bonn-Rhein-Sieg/Ruhr University Bochum) | |

| One challenge of testing autonomous systems is specifying the "correctness" of a robot's behaviour. This problem, known as the test oracle problem, stems from informal and potentially incomplete system specifications, the engineering complexity of the system, non-determinism and uncertainty, and the large state-space of the operational environment. |

| 15:20 | Discoveries about behavior trees and state machines |

| Razan Ghzouli (Chalmers University of Technology) | |

| Robots are increasingly used to perform tasks ranging from simple pick-and-place to fire fighting in dangerous areas or disinfection in contaminated hospitals. With the rising complexity of robotic missions and their integration in human life, more research is needed to ensure the safety of mission execution and compliance with user requirements. Robotic developers have adopted different practices when designing robotic missions and systems, and these practices have been mainly ad hoc. Mission constraints and assumptions are glued to the implementation code, with no model to communicate the mission flow to non-expert users, or even to developers who are not actively involved in the project. Unfortunately, these craftsmanship practices have led to hard-to-maintain systems and non-reusable components. |

| 15:45 | Coffee Break |

| 16:15-17:30 | Session 6 |

| Chair: Thorsten Berger |

| 16:15 | Bias Detection and Mitigation in Artificial Intelligence |

| Oleksandr Bezrukov (Gran Sasso Science Institute - GSSI) | |

| 16:40 | Holistic editing support and analysis of robotic systems |

| Sven Peldszus (Ruhr-University Bochum) | |

| Robotic systems are highly modularized and support dynamic reconfiguration. Usually, the individual modules are independent programs that communicate through a middleware, e.g., the Robot Operating System (ROS). In such middlewares, the communication among the individual modules is often realized based on a topic-based publish-subscribe pattern. As the dependencies resulting from the data flows of the communication are implicit, this kind of communication is a burden for efficient development as well as for static and dynamic analysis of the developed robotic system. Unlike with explicit method calls, developers cannot simply navigate to the location from where a specific message originates. The same applies to static analysis and run-time monitors within the scope of a module, e.g., for detecting unwanted feature interaction. Furthermore, a robotic system's modules can be implemented using multiple programming languages, and due to the reconfigurability, dependencies can change at run-time. These characteristics further complicate static and dynamic analyses. Accordingly, we need means to make such communication explicit for providing developers with editing support and static analyses as well as for developing hand-over mechanisms for run-time monitors within the scope of individual modules. |

| 17:05 | Seamless Variability Management With the Virtual Platform |

| Wardah Mahmood (Chalmers / University of Gothenburg) | |

| Software systems often need to exist in many variants in order to satisfy varying customer requirements and operate under varying software and hardware environments. These variant-rich systems are most commonly realized using cloning, a convenient approach to create new variants by reusing existing ones. Cloning is readily available; however, its non-systematic reuse leads to difficult maintenance. An alternative strategy is adopting platform-oriented development approaches, such as Software Product-Line Engineering (SPLE). SPLE offers systematic reuse and centralized control, and thus easier maintenance. However, adopting SPLE is a risky and expensive endeavor, often relying on significant developer intervention. Researchers have attempted to devise strategies to synchronize variants (change propagation) and migrate from clone & own to an SPL; however, these strategies are limited in accuracy and applicability. Additionally, the process models for SPLE in the literature, as we will discuss, are obsolete and only partially reflect how adoption is approached in industry. Despite many agile practices prescribing feature-oriented software development, features are still rarely documented and incorporated during actual development, making SPL migration risky and error-prone. |

| 08:40-10:35 | Session 7 |

| Chair: Mohammad Reza Mousavi |

| 08:40 | Fabricatable Machines: How to build and control them |

| Rogardt Heldal (Western Norway University of Applied Sciences) | |

| 09:00 | Rational Agents in Environments with Common Resources |

| Nicolas Troquard (Free University of Bozen-Bolzano) | |

| Turn-based games on graphs are games where each state is controlled by one and only one player, who decides which edge to follow. Each player has a temporal objective that he tries to achieve. One player is the designated ‘controller’, whose objective captures the desirable outcomes of the whole system. Cooperative rational synthesis is the problem of computing a Nash equilibrium that satisfies the controller’s objective. We present recent results about this problem in the presence of common resources, where each action has a cost or a reward on the shared common pool resources. We consider two types of agents: careless and careful. Careless agents only care for their temporal objective, while careful agents also pay attention not to deplete the resources. |

| 09:20 | Reactive Synthesis in a rich environment |

| Shaun Azzopardi (University of Malta) | |

| Reactive synthesis provides us with tools to construct, when they exist, controllers that can give certain guarantees when working in a certain environment. Both the restrictions imposed on and by the environment, and the guarantees the controller aims to achieve, are specified in LTL. LTL specifications do not come for free; instead, to apply reactive synthesis to the real world, we need to encode our objects of interest (the environment, and the goal/guarantee desired in the context of that environment) in LTL. A problem is that some aspects of the environment, e.g., other robots or programs, may exhibit behaviour not fully expressible in one single (finite) LTL specification. The question then is what level of abstraction is needed to fully determine realisability/controllability of the specification. |

| 09:45 | Explaining robots and autonomous systems verification results using causal analysis |

| Hugo Araujo (King's College London) | |

| We present a formal theory for analysing causality in cyber-physical systems - particularly, robots and autonomous systems (RAS). To this end, we extend the theory by Halpern and Pearl to cope with the continuous nature of such systems. Based on our theory, we develop an analysis technique that is used to uncover the causes for counterexamples of failures resulting from verification techniques. We develop a search-based technique to efficiently produce such causes, implement the technique in a prototype tool and apply it to our case studies. |

| 10:10 | Non-Functional Requirements in Robotic Systems |

| Davide Brugali (University of Bergamo) | |

| The design of the software architecture is driven by two types of requirements: Functional Requirements specify what the software system does, while Non-Functional Requirements (NFRs) express desired qualities, called Non-Functional Properties (NFPs). The talk illustrates three research challenges for addressing NFRs in the engineering process of autonomous robotic systems related to identification, modeling, and analysis of robotic-specific NFRs. |

| 10:35 | Coffee Break |

| 11:05-12:20 | Session 8 |

| Chair: Nico Hochgeschwender |

| 11:05 | Self-adaptive testing in the field: are we there yet? |

| Samira Silva (Gran Sasso Science Institute - GSSI) | |

| Testing in the field is gaining momentum as a means to identify those faults that escape in-house testing by continuing the testing even while a system is operating in production. Among the several approaches that have been proposed, this paper focuses on the important notion of self-adaptivity of testing in the field, as such techniques clearly need to adapt their strategy in many ways to the context and the emerging behaviors of the system under test. In this work, we investigate the topic by conducting a scoping review of the literature on self-adaptive testing in the field. We rely on a taxonomy organized in a number of categories that include the object to adapt, the adaptation trigger, its temporal characteristics, realization issues, interaction concerns, the classification of the field-based approach, and its impact/cost. Our study sheds light on self-adaptive testing in the field by identifying related key concepts and key characteristics and extracting some knowledge gaps to better guide future research. |

| 11:30 | Continuous Compliance for Automotive |

| Tiziano Santilli (Gran Sasso Science Institute - GSSI) | |

| An overview of Continuous Compliance in Software Engineering. We discuss five case studies in the automotive domain that could benefit from a Continuous Compliance approach. |

| 11:55 | Guidance for gaining confidence in ROS-based Applications |

| Ricardo Caldas (Chalmers University of Technology) | |

| The Robot Operating System (ROS) is the de-facto standard in robotics research. In fact, in 2019, the International Federation of Robotics (IFR) estimated that 70% of robotic applications are, in practice, based on ROS. The growing success of ROS may be attributed to its design for modularity. The modular design in ROS facilitates interoperability of reusable software artifacts from varied manufacturers. It also promotes late binding of software components for dealing with dynamicity, a recurring problem in robotics. Interoperability and late binding empower designers of ROS-based applications but pose challenges to trustworthy robotic behavior. After all, the emerging behavior of the combined components is left to runtime, which calls for gaining confidence through runtime assurance techniques. Employing runtime assurance is work for both the quality assurance and development teams. On the one hand, the quality assurance team of a ROS-based application must deal with black-box components, unpredictable ordering of the modules, and interaction with the physical environment. On the other hand, the development team should provide for observability and controllability, preparing the robot code for further assurance provisioning. This study synthesizes guidance for the quality assurance and design teams in delivering sound ROS-based systems. To this end, we combine (i) a literature review on studies addressing runtime assurance (i.e., runtime verification and field-based testing) to gain confidence in ROS applications and (ii) mining design principles from observable and controllable ROS-based application repositories. In this presentation, I will discuss early findings and results of our investigations. |

| 12:20 | Lunch |

| 14:30-15:45 | Session 9 |

| Chair: Patrizio Pelliccione |

| 14:30 | Towards the application of Markov Chains to Prioritize Test Cases |

| Luciana Rebelo (Gran Sasso Science Institute - GSSI) | |

| Context: Software Testing is a costly activity since the size of the test case set tends to increase as the construction of the software evolves. Test Case Prioritization (TCP) can reduce the effort and cost of software testing. TCP is an activity where a subset of the existing test cases is selected in order to maximize the possibility of finding defects. On the other hand, Markov Chains representing a reactive system, when solved, can present the occupation time of each of their states. The idea is to use such information and associate priority to those test cases that consist of states with the highest probabilities. Objective: The objective of this paper is to conduct a survey to identify and understand key initiatives for using Markov Chains in TCP. Aspects such as approaches, developed techniques, programming languages, analytical and simulation results, and validation tests are investigated. Methods: A Systematic Literature Review (SLR) was conducted considering studies published up to July 2021 from five different databases to answer the three research questions. Results: From the SLR, we identified 480 studies addressing Markov Chains in TCP, which were reviewed in order to extract relevant information on a set of research questions. Conclusion: The final 12 studies analyzed use Markov Chains at some stage of test case prioritization in distinct ways; that is, we found no strong relationship between any of the studies, neither in how the technique was used nor in the context of the application. Concerning the fields of application of this subject, 6 forms of approach were found: Controlled Markov Chain, Usage Model, Model-Based Test, Regression Test, Statistical Test, and Random Test. This demonstrates the versatility and robustness of the tool. A large part of the studies developed some prioritization tool, with validation done in some cases analytically and in others numerically, such as: Measure of the software specification, Optimal Test Transition Probabilities, Adaptive Software Testing, Automatic Prioritization, Ant Colony Optimization, Model Driven approach, and Monte Carlo Random Testing. |

| 14:55 | Model-based Performance Analysis for Architecting Cyber-Physical Dynamic Spaces |

| Riccardo Pinciroli (Gran Sasso Science Institute - GSSI) | |

| Architecting Cyber-Physical Systems is not trivial since their intrinsic nature of mixing software and hardware components poses several challenges, especially when the physical space is subject to dynamic changes, e.g., paths of robots suddenly not feasible due to objects occupying transit areas or doors being closed with a high probability. This paper provides a quantitative evaluation of different architectural patterns that can be used for cyber-physical systems to understand which patterns are more suitable under some peculiar characteristics of dynamic spaces, e.g., frequency of obstacles in paths. We use stochastic performance models to evaluate architectural patterns, and we specify the dynamic aspects of the physical space as probability values. This way, we aim to support software architects with quantitative results indicating how different design patterns affect some metrics of interest, e.g., the system response time. Experiments show that there is no unique architectural pattern suitable to cope with all the dynamic characteristics of physical spaces. Each architecture contributes differently as the physical space varies, and it is indeed beneficial to switch among multiple patterns for an optimal solution. |

| 15:20 | Cost-effective and real-time marine data quality control using machine learning |

| Thanh Nguyen (Western Norway University of Applied Sciences) | |

| Nowadays, marine data producers have to perform data quality control manually before publishing collected data. This process is labour-demanding, time-consuming, and may expose bad data to the public. Detecting anomalies is a suitable approach to automate this task. Unsupervised machine learning based on predictive models appears to be a cost-effective solution and has been increasingly employed for anomaly detection in recent years. However, existing methods encounter at least one of the following issues: empirically setting predefined thresholds to identify anomalous data, storing all residual errors to derive thresholds automatically and dynamically, and being unable to adapt to data distribution changes. The first problem makes the method inflexible, while the others demand expensive computation power and worsen performance over time. In this paper, we propose a framework consisting of a lightweight deep learning model based on Long Short-Term Memory (LSTM) and unsupervised machine learning. The model is accompanied by a cost-effective dynamic thresholding function to determine anomalies in real time. Our framework can address all the issues mentioned above and detect anomalies in marine time-series data in real time. |

| 15:45 | Coffee Break |

| 16:15-17:30 | Session 10 |

| Chair: Luciana Rebelo |

| 16:15 | Ideas for Advancing Feature Transplantation |

| Christoph Derks (Ruhr University Bochum) | |

| Reusing code is a common developer activity in software engineering. It has been practiced since the early days of programming and helps developers build larger and more complex systems faster, the idea being that there is no need to reinvent the wheel. What can be achieved with a single copy-paste operation for simple methods grows a lot more complicated when dealing with reuse and incorporation of entire software features. The latter requires the extraction and transplantation of dependent code, called by the feature or required for calling it in the first place. In recent years, a new branch of research has been established, focussing on the automation of this task. While considerable advancements were achieved, existing approaches share some key limitations, such as being restricted to non-scattered features or requiring extensive user input, e.g., defining the insertion point for the transplanted code or mechanisms to assert correctness of transplantation. This talk will discuss these issues and explore ideas for advancing the state of the art of feature transplantation. |

| 16:40 | From text to 3D: a DSL for recreating indoor environments, and derive new variations |

| Samuel Parra (Bonn-Rhein-Sieg University of Applied Sciences) | |

| To verify and validate mobile robots, which usually operate in dynamic environments, the stakeholders must argue effectively that the robot is able to perform all of its tasks safely. Developers can expose the system to as many different situations as possible to show that the system can operate in different conditions, but to do so in simulation is challenging. Creating a 3D model for simulation is time-consuming and requires knowledge of 3D modelling tools, but even for an experienced user of such tools recreating a real-world environment or creating multiple variations of one can become a difficult task to achieve, especially under time constraints. One way to reduce the time required to obtain 3D environments is to leverage the power of model-to-model transformations, and generate the 3D environments from a textual model. This talk introduces a modelling language and tooling that enables developers of mobile robot systems to describe and recreate an existing environment using the modelling language, and to later annotate probability distributions to features of the environment to derive new variations. The tooling makes it possible for developers to execute tests in simulation in multiple environments more efficiently. |

| 17:05 | Experiences from Teaching Autonomous Driving Systems |

| Thorsten Berger (Ruhr University Bochum) | |

| 17:30 | Closing |

| Organizers |